Docker is a platform that has its downsides, but it also comes with many advantages. In this article, we will dive a little deeper into what those advantages are and what makes Docker such a handy platform to learn and adopt.

If you are already into DevOps, then you know Docker is an open platform for building, shipping, and running applications. If you are a newbie, you can think of Docker as a program that takes a systematic approach to solving common software problems and simplifies the experience of installing, running, publishing, and removing applications. Pretty basic, right?

First, let’s have a look at the concept behind Docker. Docker was initially created for Linux, but it has since grown to support non-Linux operating systems as well, including macOS and Microsoft Windows. Before it became a platform in its own right, with its own ecosystem of allied tools, that makes it easier to create, deploy, and run application containers on a shared operating system (OS), it was a support platform. This takes us to one of its first advantages: Docker is not only written in the Go programming language, it also takes advantage of several features of the Linux kernel to deliver its functionality. So, Linux enthusiasts, programmers, developers of all kinds, and everyone struggling with application delivery, your prayers have been answered: Docker provides the ability to package and run an application in a loosely isolated environment called a container.

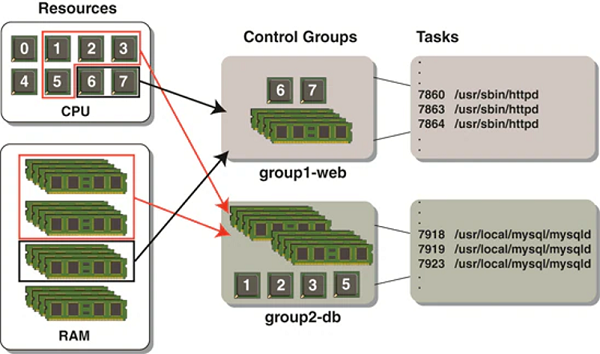

Docker uses a technology called namespaces to provide the isolated workspace called the container. When you run a container, Docker creates a set of namespaces for that container. These namespaces provide a layer of isolation: each aspect of a container runs in a separate namespace, and its access is limited to that namespace. So how does this translate into more advantages for you?
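To make the namespace idea a little more concrete, here is a minimal sketch that lists the namespaces the current process belongs to. It assumes a Linux system where `/proc/<pid>/ns` is readable. The helper name `list_namespaces` is made up for this article and is not part of Docker or of any library.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_type: identifier} for a process, read from /proc.

    On Linux, /proc/<pid>/ns holds one symlink per namespace the process
    belongs to (for example "pid", "net", "mnt"). On systems without that
    directory, an empty dict is returned.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces  # not Linux, or /proc is not available
    for name in entries:
        try:
            # Each link target looks like "net:[<inode number>]".
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # skip entries we are not allowed to read
    return namespaces


if __name__ == "__main__":
    for ns_type, ident in list_namespaces().items():
        print(f"{ns_type:10s} {ident}")
```

If you run this once on the host and once inside a container, you would typically expect different identifiers for most namespace types, which is the isolation described above.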

High fidelity across multiple environments

If you are familiar with the expression "time is money", here is why Docker is so great at saving time. One of the most frequent issues when developing applications is the unpredictable behavior that comes from differences between the Production, QA, and Development environments. The same goes for the many different machines used on a project. Because code pipelines have many moving parts, even a small difference in one environment can trigger an endless hunt for what causes what and turn solving a problem into a time-consuming task.

Using Docker images, which are immutable, you can achieve consistency across environments from Dev to Production, so that your code gets through QA and into Production without many complications. What is more, images support an organized approach to incremental updates, because you can create a new, modified image based on an existing one. You will also see an increase in productivity, since the various teams working on a project can download the same base container image and create new container instances from it. In addition, the time it takes to get from build to production will drop notably.
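The idea of immutable images that build on one another can be sketched with a toy model. This is only an illustration, not Docker's actual image format: the `Image` class, its `extend` method, and the layer strings are all hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """A toy image: an ordered, immutable tuple of layer contents."""

    layers: tuple = ()

    @property
    def image_id(self):
        # Content-derived ID: the same layers always give the same ID.
        digest = hashlib.sha256()
        for layer in self.layers:
            digest.update(hashlib.sha256(layer.encode("utf-8")).digest())
        return digest.hexdigest()[:12]

    def extend(self, layer):
        # Never modify in place; return a new image that reuses the old layers.
        return Image(self.layers + (layer,))


# A shared base image that several teams could start from.
base = Image().extend("os packages").extend("python runtime")

# Two incremental updates built on the same, unchanged base.
app_v1 = base.extend("app code v1")
app_v2 = base.extend("app code v2")

if __name__ == "__main__":
    print("base  ", base.image_id, len(base.layers), "layers")
    print("app_v1", app_v1.image_id, len(app_v1.layers), "layers")
    print("app_v2", app_v2.image_id, len(app_v2.layers), "layers")
```

Because `base` is never changed, every environment that starts from it sees exactly the same contents, and each update is just a new image with one more layer on top.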

-

Rapid deployment

Speaking of time, what would you say about rapid deployment? Sounds good, right? Here is how Docker helps: imagine you would like to stop juggling the many tasks that come with virtual machines (VMs) and feel less overwhelmed by them. I am not saying you should not learn about VMs as well, but instead of running a VM, which is basically an entire operating system, it is much easier to cut out the "unnecessary" components of the virtual OS and work with a smaller version of it.

This means using a Docker container, which does not require a separate operating system. In other words, a container is a way to run applications that are isolated from each other. Rather than virtualizing the hardware to run multiple operating systems, containers rely on virtualizing the operating system to run multiple applications. This way you can run more containers than VMs on the same hardware, because only one copy of the OS is running and you do not need to allocate memory and CPU cores in advance for each instance of your app. So you should be faster and more efficient than with VMs. As a result, containers have become a popular option for deploying applications to the cloud and to remote data centers. You get the job done and, at the same time, put some money aside.
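A rough back-of-the-envelope calculation shows why sharing one OS lets you fit more instances on the same hardware. Every number below (host memory, per-VM guest OS overhead, per-container overhead) is a made-up assumption for illustration, not a measurement, and the function `max_instances` is a hypothetical helper.

```python
def max_instances(host_mem_mb, app_mem_mb, per_instance_overhead_mb,
                  shared_overhead_mb=0):
    """How many app instances fit in memory on one host.

    shared_overhead_mb is paid once (for example, the host OS).
    per_instance_overhead_mb is paid for every instance
    (for example, a full guest OS for each VM).
    """
    usable = host_mem_mb - shared_overhead_mb
    if usable <= 0:
        return 0
    return usable // (app_mem_mb + per_instance_overhead_mb)


# Assumed figures, chosen only to illustrate the arithmetic.
HOST_MEM_MB = 16384            # 16 GB host
HOST_OS_MB = 1024              # host OS, paid once in both setups
APP_MEM_MB = 256               # memory one app instance needs
GUEST_OS_MB = 1024             # assumed guest OS cost per VM
CONTAINER_OVERHEAD_MB = 16     # assumed runtime cost per container

vm_count = max_instances(HOST_MEM_MB, APP_MEM_MB, GUEST_OS_MB, HOST_OS_MB)
container_count = max_instances(HOST_MEM_MB, APP_MEM_MB,
                                CONTAINER_OVERHEAD_MB, HOST_OS_MB)

if __name__ == "__main__":
    print("VMs that fit:       ", vm_count)         # 12 with these assumptions
    print("Containers that fit:", container_count)  # 56 with these assumptions
```

The exact ratio depends entirely on the assumed overheads. The point is only that the per-instance cost of a guest OS is paid once per VM, while containers pay the OS cost once per host.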

-

Better security

Another important issue to take into account when it comes to applications is security. Because every container Docker creates has its own resources and runs independently, the applications and services running inside containers are isolated from each other. This means one container cannot poke into another container. If something goes wrong with one container, it only affects the data available in that container, without affecting the rest of the containers.

However, keep in mind that even though Docker is quite safe, you still need a team of DevOps specialists who can come up with customized solutions for extra security. A dynamic Docker environment in a large-scale project has many moving pieces, so monitoring workloads as you add them may become a challenge.

-

Improved team collaboration and customer experience

Everyone knows that better collaboration across teams and an improved customer experience translate into increased revenues and profits. Docker allows you to segment an application, so you can refresh, clean up, or repair part of it without taking the whole application down. You can also build an entire architecture out of small processes that communicate with each other through APIs, so developers can take it from there and work together to solve any potential issues. Another advantage is straightforward maintenance. When an application is containerized, it is isolated from the other apps running on the same system. Because the applications don’t mix with each other, they are easier to manage, and the setup lends itself to automation.

-

Docker supports agile and DevOps

The idea of DevOps came up in 2009, when Patrick Debois was searching for a solution that would put an end to the feud between developers and sysadmins. But that was not the whole story: Debois’ goal was to minimize the time and cost of building software. This is why one of the main advantages of implementing DevOps is the constant improvement of development and operations to increase profitability.

If your engineering teams adopt a DevOps model, they will use a lot of automation in their development cycle. Docker provides a series of advantages here, since working with a containerized application offers a better understanding of what you are doing. This way you can focus on building dedicated teams instead of skills-centric silos.

-

Conclusions

In conclusion, what a DevOps technology does is manage an entire workload (software development, QA, and IT operations such as reviewing, testing, and deploying) while maintaining an agile perspective on the workflow. This results in shorter development cycles and increased efficiency, because DevOps enables teams to react fast and to ensure reliability during peak times. That is why developing a comprehensive strategy focused on scalability, reliability, and continued growth is important not only for every team but for every organization.